This is Part 4 in the Replacing the AWS ELB series.

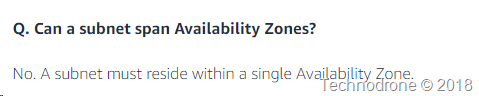

Why does this whole setup with the network actually work? Networking in AWS is not that complicated (sometimes it can be, but usually it is pretty simple), so why do you need to add an additional IP address into the mix, and one that is not even really part of the VPC?

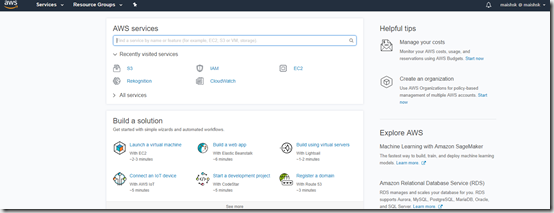

To answer that question, we need to understand the basic construct of the route table in an AWS VPC. Think of the route table as a road sign that tells traffic where it should go.

Maybe not such a clear sign after all

(Source: https://www.flickr.com/photos/nnecapa)

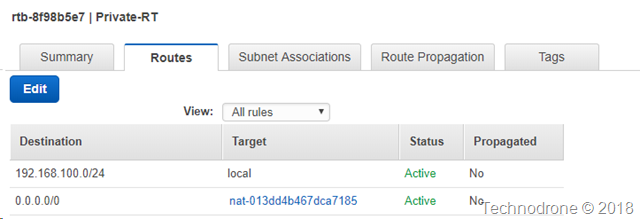

Here is what a standard route table (RT) would look like:

The first entry says that all traffic destined for your VPC's own address range stays local, i.e. it is routed within your VPC. The second entry says that all other traffic, which does not belong to the VPC, is sent to another device (in this case a NAT Gateway).
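To make the lookup rule concrete, here is a small bash sketch that models a route table as a list of `CIDR target` pairs and picks the most specific matching entry (longest prefix match, which is how AWS chooses between overlapping routes). The VPC CIDR `10.0.0.0/16` and the NAT Gateway ID are hypothetical placeholders, not the values from the screenshot.

```bash
#!/usr/bin/env bash
# Sketch: model a VPC route table and resolve a destination IP
# using longest prefix match. All CIDRs and IDs are hypothetical.
set -euo pipefail

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  local -a octets=($1)
  echo $(( (octets[0] << 24) | (octets[1] << 16) | (octets[2] << 8) | octets[3] ))
}

# Hypothetical route table, one "DESTINATION_CIDR TARGET" pair per entry:
# VPC-internal traffic stays local, everything else goes to the NAT Gateway.
ROUTES=(
  "10.0.0.0/16 local"
  "0.0.0.0/0 nat-0123456789abcdef0"
)

# Print the target of the most specific route that matches the given IP.
lookup_route() {
  local ip best_len=-1 best_target="" entry cidr target net len mask
  ip=$(ip_to_int "$1")
  for entry in "${ROUTES[@]}"; do
    read -r cidr target <<< "$entry"
    net=$(ip_to_int "${cidr%/*}")
    len=${cidr#*/}
    # Netmask with the top $len bits set (0 for the default route).
    mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
    # Keep the matching route with the longest prefix seen so far.
    if (( (ip & mask) == (net & mask) && len > best_len )); then
      best_len=$len
      best_target=$target
    fi
  done
  echo "$best_target"
}
```

Appending a hypothetical VIP entry such as `"172.16.1.100/32 i-0123456789abcdef0"` to `ROUTES` makes that single address resolve to the instance, while every other address keeps its previous route, because a `/32` is more specific than any other entry that matches it.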

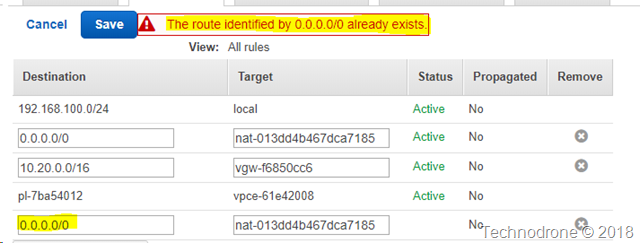

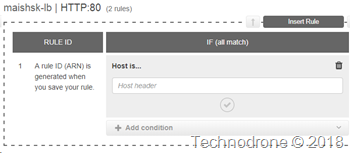

You are the master of your RT, which means you can route traffic destined for any address you like to any target you like. Of course, you cannot have duplicate entries in the RT, or you will receive an error.

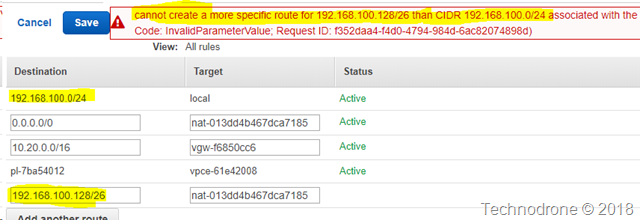

And you cannot take a smaller subset of the VPC's own address range (already covered by the local route) and route it to a different target.

But otherwise you can really do what you would like.

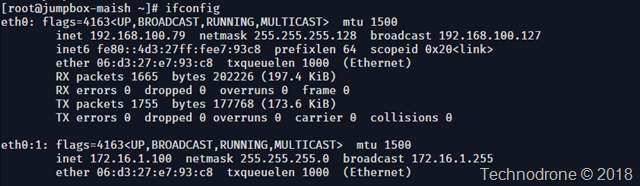

Defining an additional interface on an instance is straightforward.

For example, on a CentOS/RHEL instance you create a new file in /etc/sysconfig/network-scripts/, named after the device (here `ifcfg-eth0:1`):

```
DEVICE="eth0:1"
BOOTPROTO="none"
MTU="1500"
ONBOOT="yes"
TYPE="Ethernet"
NETMASK=255.255.255.0
IPADDR=172.16.1.100
USERCTL=no
```

This creates an alias interface (eth0:1) carrying a second IP address on your instance.
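If you would rather script this step than edit the file by hand, a sketch like the following writes the same settings to an `ifcfg-<device>` file. The function name and the choice to pass the target directory as a parameter (so it can be tried against a scratch directory first) are my own assumptions. On a real CentOS/RHEL host using the legacy network-scripts, you would point it at `/etc/sysconfig/network-scripts` and then bring the alias up with `ifup eth0:1`.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: write an ifcfg file for an alias interface carrying the VIP.
# Usage: write_alias_cfg DIR DEVICE IPADDR NETMASK
write_alias_cfg() {
  local dir=$1 device=$2 ipaddr=$3 netmask=$4
  # Same keys as the hand-written file; values are substituted from the arguments.
  cat > "${dir}/ifcfg-${device}" <<EOF
DEVICE="${device}"
BOOTPROTO="none"
MTU="1500"
ONBOOT="yes"
TYPE="Ethernet"
NETMASK=${netmask}
IPADDR=${ipaddr}
USERCTL=no
EOF
}
```

For example: `write_alias_cfg /etc/sysconfig/network-scripts eth0:1 172.16.1.100 255.255.255.0 && ifup eth0:1`.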

Of course, no other entity in the subnet knows that this IP exists on the network, only the instance itself.

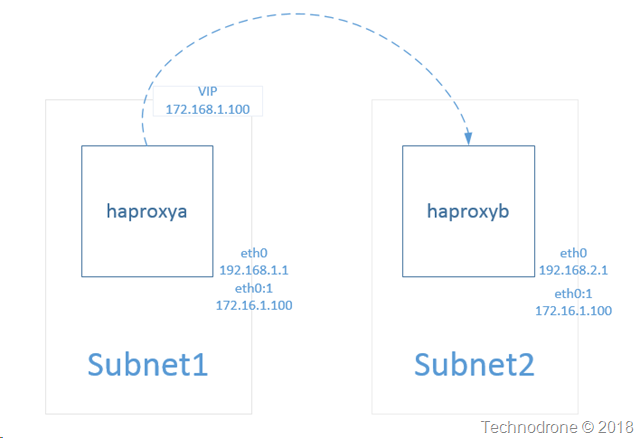

That is why you can assign the same IP address to more than a single instance.
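In other words, the route decides which of the instances holding the VIP actually receives its traffic. A minimal sketch of the initial setup, using hypothetical IDs, creates a `/32` route for the VIP pointing at the current master with `aws ec2 create-route`. It also disables the EC2 source/destination check on the instance, which is generally required for an instance to accept packets addressed to an IP that is not assigned to its network interface. With `DRY_RUN=1` the functions only print the AWS CLI commands instead of running them.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Run a command, or only print it when DRY_RUN=1.
run() {
  if [[ "${DRY_RUN:-0}" == "1" ]]; then
    echo "$*"
  else
    "$@"
  fi
}

# Create a route sending traffic for the VIP (e.g. 172.16.1.100/32) to an instance.
# Usage: add_vip_route ROUTE_TABLE_ID VIP_CIDR INSTANCE_ID
add_vip_route() {
  run aws ec2 create-route \
    --route-table-id "$1" \
    --destination-cidr-block "$2" \
    --instance-id "$3"
}

# Let the instance accept traffic for addresses other than its own ENI IP.
# Usage: disable_src_dst_check INSTANCE_ID
disable_src_dst_check() {
  run aws ec2 modify-instance-attribute \
    --instance-id "$1" \
    --no-source-dest-check
}
```

For example: `DRY_RUN=1 add_vip_route rtb-0123456789abcdef0 172.16.1.100/32 i-0123456789abcdef0` prints the command without touching your VPC. The source/destination check would need to be disabled on every instance that can hold the VIP, not only the current master.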

Transferring the VIP to another instance

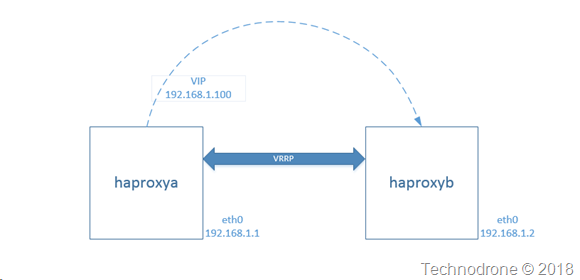

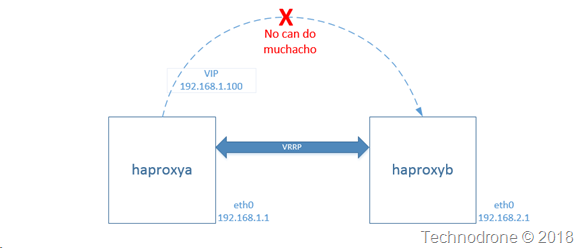

In the previous post, the last graphic showed that in the case of a failure, haproxyb would send an API request that transfers the route to the new instance.

keepalived can run a script whenever the VRRP instance changes state, for example when its peer fails and this node becomes MASTER. This is configured with the `notify` option:

```
vrrp_instance haproxy {
    [...]
    notify /etc/keepalived/master.sh
}
```
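keepalived calls a `notify` script with at least three arguments: the type (`GROUP` or `INSTANCE`), the name of the group or instance, and the new state (`MASTER`, `BACKUP` or `FAULT`). Because the script runs on every transition, a minimal skeleton for `/etc/keepalived/master.sh` could dispatch on the third argument so that only the transition to MASTER touches the route. The `take_over_route` function here is a hypothetical placeholder for the actual route change.

```bash
#!/usr/bin/env bash
# Sketch of /etc/keepalived/master.sh used as a keepalived "notify" script.
# keepalived passes: $1 = GROUP|INSTANCE, $2 = name, $3 = new state.
set -euo pipefail

# Hypothetical placeholder: the real work (moving the VIP route) goes here.
take_over_route() {
  echo "taking over route for instance $1"
}

handle_state() {
  local type=${1:-} name=${2:-} state=${3:-}
  case "$state" in
    MASTER)
      # This node just became master: point the VIP route at ourselves.
      take_over_route "$name"
      ;;
    BACKUP|FAULT)
      # Nothing to do: the new master moves the route to itself.
      echo "$type $name entered $state, no route change"
      ;;
    *)
      # Unknown states are logged to stderr and otherwise ignored.
      echo "ignoring unexpected state '$state'" >&2
      ;;
  esac
}

# Only act when keepalived actually invoked us with arguments.
if (( $# >= 3 )); then
  handle_state "$@"
fi
```

keepalived also offers a `notify_master` option that runs a script only on the transition to MASTER; dispatching inside a single `notify` script keeps all transitions visible in one place.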

That is a regular bash script, so it can do whatever you like, which conveniently includes manipulating routes through the AWS CLI.

```bash
aws ec2 replace-route --route-table-id <ROUTE_TABLE> --destination-cidr-block <CIDR_BLOCK> --instance-id <INSTANCE_ID>
```

The parameters you need to know are:

- The ID of the route table that contains the route you need to change

- The destination CIDR block of that route (the VIP)

- The ID of the instance the traffic should be sent to (the new master)
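Putting these three parameters together, a sketch of the route takeover might look like the following. It assumes the instance can reach the EC2 instance metadata service without a session token (IMDSv1 style) to learn its own instance ID, that its IAM role allows `ec2:ReplaceRoute`, that the AWS CLI already has a region configured, and that the route table ID and VIP CIDR are supplied through the hypothetical environment variables `ROUTE_TABLE_ID` and `VIP_CIDR`. Set `DRY_RUN=1` to print the command instead of calling AWS.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Assumed inputs (hypothetical names), provided via the environment.
ROUTE_TABLE_ID="${ROUTE_TABLE_ID:-}"
VIP_CIDR="${VIP_CIDR:-}"

# Basic sanity checks on the three parameters replace-route needs.
validate_params() {
  local rtb=$1 cidr=$2 instance=$3
  local re='^([0-9]{1,3}\.){3}[0-9]{1,3}/[0-9]{1,2}$'
  [[ $rtb == rtb-* ]] || { echo "bad route table id: $rtb" >&2; return 1; }
  [[ $cidr =~ $re ]] || { echo "bad CIDR: $cidr" >&2; return 1; }
  [[ $instance == i-* ]] || { echo "bad instance id: $instance" >&2; return 1; }
}

# Ask the instance metadata service (IMDSv1 style) for this instance's ID.
my_instance_id() {
  curl -sf http://169.254.169.254/latest/meta-data/instance-id
}

# Point the VIP route at the given instance (or just print the command).
replace_vip_route() {
  local rtb=$1 cidr=$2 instance=$3
  validate_params "$rtb" "$cidr" "$instance" || return 1
  local cmd=(aws ec2 replace-route --route-table-id "$rtb"
             --destination-cidr-block "$cidr" --instance-id "$instance")
  if [[ "${DRY_RUN:-0}" == "1" ]]; then
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}

# When run as "script takeover", move the VIP route to this instance.
if [[ "${1:-}" == "takeover" ]]; then
  replace_vip_route "$ROUTE_TABLE_ID" "$VIP_CIDR" "$(my_instance_id)"
fi
```

A notify script could then call this with the `takeover` argument on the transition to MASTER. Validating the inputs first means a missing environment variable fails loudly instead of sending a malformed API request.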

Of course, a lot of moving parts need to fall into place for all of this to work, and doing it manually would be a nightmare, especially at scale. That is why automation is crucial.

In the next post, I will explain how you can achieve this with a set of Ansible playbooks.